Vector product

A vector product, also known as the cross product, is an antisymmetric product A×B = −B×A of two vectors A and B in three-dimensional Euclidean space ℝ³. The vector product is again a three-dimensional vector. It is widely used in many areas of mathematics, mechanics, electromagnetism, the theory of gravitational fields, and elsewhere.

A proper vector changes sign under inversion, while a cross product is invariant under inversion (both factors of the cross product change sign and (−1)×(−1) = 1). A vector that does not change sign under inversion is called an axial vector or pseudovector; hence a cross product is a pseudovector. In this context a vector that does change sign is often referred to as a polar vector.
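The inversion argument can be checked numerically. The following is a minimal Python sketch; the helpers `cross` and `invert` are illustrative names of our own, and `cross` uses the Cartesian component formula given later in this article.

```python
# Illustrative helpers (our own names, not from the article).
def cross(a, b):
    """Cross product of two 3-vectors given by their Cartesian components."""
    ax, ay, az = a
    bx, by, bz = b
    return (ay * bz - az * by, az * bx - ax * bz, ax * by - ay * bx)


def invert(v):
    """Spatial inversion: every Cartesian component changes sign."""
    return tuple(-c for c in v)


A = (1, 2, 3)
B = (4, 5, 6)

# The factors change sign under inversion (polar vectors) ...
print(invert(A), invert(B))                      # (-1, -2, -3) (-4, -5, -6)
# ... but their cross product does not (pseudovector behaviour).
print(cross(A, B), cross(invert(A), invert(B)))  # (-3, 6, -3) (-3, 6, -3)
```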


Definition

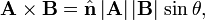

Given two vectors A and B in ℝ³, the vector product is a vector of length AB sin θ, where A is the length of A, B is the length of B, and θ is the smaller (non-reentrant) angle between A and B. The direction of the vector product is perpendicular (normal) to the plane containing the vectors A and B and follows the right-hand rule,

$$\mathbf{A}\times\mathbf{B} = AB\sin\theta\;\hat{\mathbf{u}},$$

where $\hat{\mathbf{u}}$ is a unit vector normal to the plane spanned by A and B in the right-hand rule direction; see Fig. 1 (in which vectors are indicated by lowercase letters).
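As an illustration, here is a minimal Python sketch that checks both parts of this definition for one pair of vectors: the length AB sin θ and the direction perpendicular to A and B. It assumes the Cartesian component formula given further below for computing A×B; the helper names are our own.

```python
import math


# Illustrative helpers (our own names, not from the article).
def cross(a, b):
    """Cross product from Cartesian components (right-handed frame)."""
    ax, ay, az = a
    bx, by, bz = b
    return (ay * bz - az * by, az * bx - ax * bz, ax * by - ay * bx)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def norm(a):
    return math.sqrt(dot(a, a))


A = (2.0, 0.0, 0.0)
B = (1.0, 1.0, 0.0)

# theta is the smaller angle between A and B, in [0, pi]
theta = math.acos(dot(A, B) / (norm(A) * norm(B)))
C = cross(A, B)

# Length: |A x B| equals A B sin(theta)
print(math.isclose(norm(C), norm(A) * norm(B) * math.sin(theta)))  # True
# Direction: perpendicular to A and B; here along +z, as the right-hand rule predicts
print(dot(C, A), dot(C, B), C)  # 0.0 0.0 (0.0, 0.0, 2.0)
```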

We recall that the length of a vector is the square root of the dot product of the vector with itself, $A \equiv |\mathbf{A}| = (\mathbf{A}\cdot\mathbf{A})^{1/2}$, and similarly for the length of B. A unit vector has, by definition, length one.

From the antisymmetry A×B = −B×A it follows that the cross (vector) product of any vector with itself is zero, because A×A = −A×A and the only vector equal to minus itself is the zero vector. Because the cross product is linear in each factor, the same holds for a parallel or antiparallel vector B = λA: A×(λA) = λ(A×A) = 0. Alternatively, one may derive this from the fact that sin 0° = 0 (parallel vectors) and sin 180° = 0 (antiparallel vectors).

The right-hand rule

The diagram in Fig. 1 illustrates the direction of a×b, which follows the right-hand rule. If one points the fingers of the right hand towards the head of vector a (with the wrist at the origin) and then curls them towards the direction of b, the extended thumb points in the direction of a×b. If a and b are interchanged, the thumb points downward and the cross product changes sign.

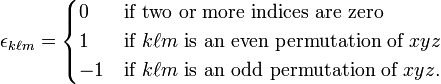

Definition using the Levi-Civita symbol

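A concise definition of the vector product uses the antisymmetric Levi-Civita symbol,

$$\varepsilon_{ijk} = \begin{cases} +1 & \text{if } (i,j,k) \text{ is an even permutation of } (1,2,3),\\ -1 & \text{if } (i,j,k) \text{ is an odd permutation of } (1,2,3),\\ 0 & \text{otherwise (two or more indices equal).} \end{cases}$$

(Odd and even permutations are defined in a separate article.)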

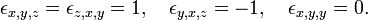

Examples,

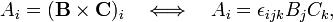

In terms of the Levi-Civita symbol, component i of the vector product is defined as

$$(\mathbf{A}\times\mathbf{B})_i = \varepsilon_{ijk}\,A_j\,B_k,$$

where the summation convention is implied: repeated indices are summed over (here over j and k).
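The index formula translates directly into code. In this minimal Python sketch (helper names are ours, for illustration), indices 0, 1, 2 stand for 1, 2, 3 (that is, x, y, z), and the summation over j and k that the convention leaves implicit is written out.

```python
from itertools import product

# Illustrative helpers (our own names). Even and odd permutations of (0, 1, 2),
# i.e. of (1, 2, 3) with 0-based indices.
EVEN = {(0, 1, 2), (1, 2, 0), (2, 0, 1)}
ODD = {(0, 2, 1), (2, 1, 0), (1, 0, 2)}


def levi_civita(i, j, k):
    """epsilon_{ijk}: +1 (even permutation), -1 (odd permutation), 0 (repeated index)."""
    if (i, j, k) in EVEN:
        return 1
    if (i, j, k) in ODD:
        return -1
    return 0


def cross_levi_civita(a, b):
    """(A x B)_i = sum over j, k of epsilon_{ijk} A_j B_k."""
    return tuple(
        sum(levi_civita(i, j, k) * a[j] * b[k] for j, k in product(range(3), repeat=2))
        for i in range(3)
    )


print(cross_levi_civita((1, 2, 3), (4, 5, 6)))  # (-3, 6, -3)
```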

Another formulation of the cross product

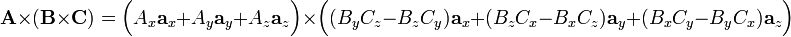

Instead of the angle and the perpendicular unit vector, another form of the cross product is often used. In this alternative definition the vectors must be expressed with respect to a right-handed Cartesian (orthonormal) coordinate frame $\mathbf{a}_x$, $\mathbf{a}_y$, $\mathbf{a}_z$ of ℝ³.

With respect to this frame we write $\mathbf{A} = (A_x, A_y, A_z)$ and $\mathbf{B} = (B_x, B_y, B_z)$. Then

- $\mathbf{A}\times\mathbf{B} = (A_yB_z - A_zB_y)\,\mathbf{a}_x + (A_zB_x - A_xB_z)\,\mathbf{a}_y + (A_xB_y - A_yB_x)\,\mathbf{a}_z.$
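A direct transcription of this component formula, as a minimal Python sketch (the function name is ours, for illustration). Applied to the frame vectors themselves it reproduces the right-hand rule, a_x × a_y = a_z and cyclically, and it exhibits the antisymmetry A×B = −B×A.

```python
# Illustrative helper (our own name, not from the article).
def cross(a, b):
    """A x B from components with respect to a right-handed orthonormal frame."""
    ax, ay, az = a
    bx, by, bz = b
    return (
        ay * bz - az * by,  # coefficient of a_x
        az * bx - ax * bz,  # coefficient of a_y
        ax * by - ay * bx,  # coefficient of a_z
    )


e_x, e_y, e_z = (1, 0, 0), (0, 1, 0), (0, 0, 1)

print(cross(e_x, e_y), cross(e_y, e_z), cross(e_z, e_x))  # (0, 0, 1) (1, 0, 0) (0, 1, 0)
print(cross(e_y, e_x))                                    # (0, 0, -1)

A, B = (1, 2, 3), (4, 5, 6)
print(cross(A, B), cross(B, A))                           # (-3, 6, -3) (3, -6, 3)
```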

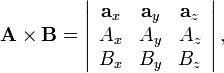

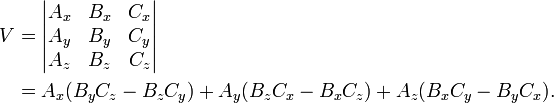

This formula can be written more concisely with the help of a determinant:

$$\mathbf{A}\times\mathbf{B} = \det\begin{pmatrix} \mathbf{a}_x & \mathbf{a}_y & \mathbf{a}_z\\ A_x & A_y & A_z\\ B_x & B_y & B_z \end{pmatrix},$$

where det denotes the determinant of a matrix. Because the first row contains vectors rather than numbers, this is a formal determinant: it is to be evaluated by cofactor expansion along the first row, which yields the component formula above.
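The first-row expansion can be spelled out in a short Python sketch (function names are ours, for illustration): each basis vector is multiplied by its cofactor, a signed 2×2 minor built from the components of A and B, with signs +, −, +. The result coincides with the component formula.

```python
# Illustrative helpers (our own names, not from the article).
def det2(p, q, r, s):
    """Determinant of the 2x2 matrix [[p, q], [r, s]]."""
    return p * s - q * r


def cross_by_determinant(A, B):
    """Expand det([[a_x, a_y, a_z], [A_x, A_y, A_z], [B_x, B_y, B_z]]) along the first row.

    Returns the coefficients of a_x, a_y, a_z, i.e. the three cofactors of the first row.
    """
    Ax, Ay, Az = A
    Bx, By, Bz = B
    return (
        +det2(Ay, Az, By, Bz),  # cofactor of a_x: delete row 1 and column 1
        -det2(Ax, Az, Bx, Bz),  # cofactor of a_y: delete row 1 and column 2, sign -
        +det2(Ax, Ay, Bx, By),  # cofactor of a_z: delete row 1 and column 3
    )


print(cross_by_determinant((1, 2, 3), (4, 5, 6)))  # (-3, 6, -3)
```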

Geometric representation of the length

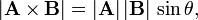

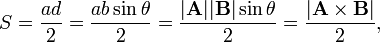

The length of the cross product of vectors A and B is equal to

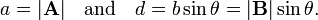

because $\hat{\mathbf{u}}$ has, by definition, length 1. Using the elementary rule that the area S of a triangle is its base a times half its height d, we see in Fig. 2 that the area S of the dotted triangle is equal to

$$S = \tfrac{1}{2}\,a\,d = \tfrac{1}{2}\,AB\sin\theta = \tfrac{1}{2}\,|\mathbf{A}\times\mathbf{B}|,$$

because, as follows from Fig. 2,

$$a = A \qquad\text{and}\qquad d = B\sin\theta.$$

Hence |A×B| = 2S. Since the dotted triangle with sides a, b, and c is congruent to the dashed triangle, the two triangles have equal areas. Together they make up the parallelogram spanned by A and B, so the length 2S of the cross product is equal to the sum of the areas of the dotted and the dashed triangle.

In conclusion:

- The length (magnitude) of A×B is equal to the area of the parallelogram spanned by the vectors A and B.
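The area statement can be checked independently of the cross product by computing the triangle area from its three side lengths with Heron's formula (a swapped-in elementary technique, not used in the text). A minimal Python sketch, helper names ours:

```python
import math


# Illustrative helpers (our own names, not from the article).
def cross(a, b):
    ax, ay, az = a
    bx, by, bz = b
    return (ay * bz - az * by, az * bx - ax * bz, ax * by - ay * bx)


def norm(v):
    return math.sqrt(sum(c * c for c in v))


A = (3.0, 0.0, 0.0)
B = (1.0, 2.0, 2.0)

# Side lengths of the triangle spanned by A and B
a = norm(A)
b = norm(B)
c = norm(tuple(q - p for p, q in zip(A, B)))  # |B - A|

s = (a + b + c) / 2
triangle = math.sqrt(s * (s - a) * (s - b) * (s - c))  # Heron's formula

# Parallelogram = two congruent triangles, and its area equals |A x B|
print(norm(cross(A, B)), 2 * triangle)  # both approximately 8.485 (= 6 * sqrt(2))
```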

Application: the volume of a parallelepiped

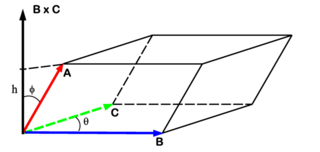

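The volume V of the parallelepiped shown in Fig. 3 is given by

$$V = \mathbf{A}\cdot(\mathbf{B}\times\mathbf{C}).$$

(This expression is positive when A, B, C form a right-handed triple; otherwise V is its absolute value.)

Indeed, remember that the volume of a parallelepiped is given by the area S of its base times its height h, V = Sh. Above it was shown that, if

$$\mathbf{D} \equiv \mathbf{B}\times\mathbf{C}, \qquad\text{then}\qquad |\mathbf{D}| = S,$$

the area of the base parallelogram spanned by B and C. The height h of the parallelepiped is the length of the projection of the vector A onto D. The dot product of A and D is |D| times the length h,

$$\mathbf{A}\cdot\mathbf{D} = |\mathbf{D}|\,h = S\,h,$$

so that V = (B×C)⋅A = A⋅(B×C).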
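A small numerical sketch in Python (helper names ours, for illustration) follows the argument step by step: the base area S = |D| with D = B×C, the height h as the projection of A onto D, and the product Sh compared with A⋅(B×C). The vectors are chosen so that A, B, C form a right-handed triple; otherwise the triple product comes out negative and V is its absolute value.

```python
import math


# Illustrative helpers (our own names, not from the article).
def cross(a, b):
    ax, ay, az = a
    bx, by, bz = b
    return (ay * bz - az * by, az * bx - ax * bz, ax * by - ay * bx)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


A = (1.0, 2.0, 5.0)  # slanted edge
B = (2.0, 0.0, 0.0)  # base edge
C = (0.0, 3.0, 0.0)  # base edge

D = cross(B, C)           # normal to the base, |D| = S
S = math.sqrt(dot(D, D))  # base area
h = dot(A, D) / S         # height: projection of A onto the direction of D

print(S * h, dot(A, cross(B, C)))  # 30.0 30.0
```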

It is of interest to point out that V can be given by a determinant that contains the components of A, B, and C with respect to a Cartesian coordinate system,

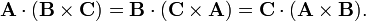

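From the permutation properties of a determinant (a cyclic permutation of its rows leaves it unchanged) it follows that

$$\mathbf{A}\cdot(\mathbf{B}\times\mathbf{C}) = \mathbf{B}\cdot(\mathbf{C}\times\mathbf{A}) = \mathbf{C}\cdot(\mathbf{A}\times\mathbf{B}).$$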

The product A⋅(B×C) is often referred to as the scalar triple product. It is a pseudoscalar. The term scalar refers to the fact that the triple product is invariant when one and the same rotation is applied simultaneously to A, B, and C. The term pseudo refers to the fact that simultaneous inversion

- A → −A, B → −B, and C → −C

converts the triple product into minus itself, while a proper scalar is invariant under inversion.
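Both properties of the triple product, the cyclic symmetry that follows from the determinant and the sign change under inversion, can be verified with integer vectors in a minimal Python sketch (helper names ours, for illustration):

```python
# Illustrative helpers (our own names, not from the article).
def cross(a, b):
    ax, ay, az = a
    bx, by, bz = b
    return (ay * bz - az * by, az * bx - ax * bz, ax * by - ay * bx)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def triple(a, b, c):
    """Scalar triple product a . (b x c)."""
    return dot(a, cross(b, c))


def invert(v):
    return tuple(-x for x in v)


A, B, C = (1, 2, 3), (0, 1, 4), (5, 6, 0)

print(triple(A, B, C), triple(B, C, A), triple(C, A, B))  # 1 1 1   (cyclic: unchanged)
print(triple(A, C, B))                                    # -1      (odd permutation)
print(triple(invert(A), invert(B), invert(C)))            # -1      (inversion: pseudoscalar)
```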

Cross product as a linear map

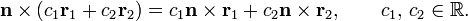

Given a fixed vector n, the application of n× is linear,

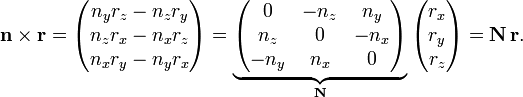

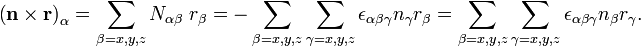

This implies that n×r can be written as a matrix-vector product,

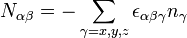

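The matrix N has the general element

$$N_{\alpha\beta} = \sum_{\gamma} \varepsilon_{\alpha\gamma\beta}\, n_\gamma = -\sum_{\gamma} \varepsilon_{\alpha\beta\gamma}\, n_\gamma,$$

where εαβγ is the antisymmetric Levi-Civita symbol. It follows that N is antisymmetric, $N_{\alpha\beta} = -N_{\beta\alpha}$; explicitly,

$$\mathbf{N} = \begin{pmatrix} 0 & -n_z & n_y\\ n_z & 0 & -n_x\\ -n_y & n_x & 0 \end{pmatrix}.$$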
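The matrix N can be built directly from its general element. In this minimal Python sketch (names ours, for illustration; 0-based indices stand for x, y, z), N is assembled with the Levi-Civita symbol and then checked: N r reproduces n×r, and N is antisymmetric.

```python
# Illustrative helpers (our own names, not from the article).
EVEN = {(0, 1, 2), (1, 2, 0), (2, 0, 1)}
ODD = {(0, 2, 1), (2, 1, 0), (1, 0, 2)}


def eps(i, j, k):
    """Levi-Civita symbol with 0-based indices."""
    return 1 if (i, j, k) in EVEN else -1 if (i, j, k) in ODD else 0


def cross_matrix(n):
    """N with elements N[a][b] = -sum over g of eps(a, b, g) * n[g]."""
    return [[-sum(eps(a, b, g) * n[g] for g in range(3)) for b in range(3)] for a in range(3)]


def matvec(M, r):
    return tuple(sum(M[i][j] * r[j] for j in range(3)) for i in range(3))


n, r = (1, 2, 3), (4, 5, 6)
N = cross_matrix(n)

print(N)             # [[0, -3, 2], [3, 0, -1], [-2, 1, 0]]
print(matvec(N, r))  # (-3, 6, -3), the same as n x r
```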

Relation to infinitesimal rotation

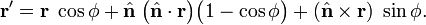

The rotation of a vector r around the unit vector $\hat{\mathbf{n}}$ over an angle φ sends r to r′. The rotated vector is related to the original vector by (a proof is given in a separate article):

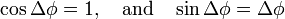

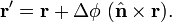

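Suppose now that φ is infinitesimal and equal to Δφ, i.e., squares and higher powers of Δφ are negligible with respect to Δφ. Then cos Δφ ≈ 1 and sin Δφ ≈ Δφ, and

$$\mathbf{r}' = \mathbf{r} + \Delta\varphi\;(\hat{\mathbf{n}}\times\mathbf{r}),$$

so that

$$\frac{\mathbf{r}'-\mathbf{r}}{\Delta\varphi} = \hat{\mathbf{n}}\times\mathbf{r}.$$

The linear operator $\hat{\mathbf{n}}\times$ maps ℝ³→ℝ³; it is known as the generator of an infinitesimal rotation around $\hat{\mathbf{n}}$.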
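The first-order statement can be checked numerically. The sketch below assumes the rotation formula in its common (Rodrigues) form, r′ = cos φ r + sin φ (n̂×r) + (1 − cos φ)(n̂⋅r) n̂; the function names are ours, for illustration. For a small angle Δφ, the exact rotation and the linear approximation r + Δφ (n̂×r) differ only at second order in Δφ.

```python
import math


# Illustrative helpers (our own names, not from the article).
def cross(a, b):
    ax, ay, az = a
    bx, by, bz = b
    return (ay * bz - az * by, az * bx - ax * bz, ax * by - ay * bx)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def rotate(r, n, phi):
    """Rotate r about the unit vector n by angle phi (assumed Rodrigues form)."""
    c, s = math.cos(phi), math.sin(phi)
    n_cross_r = cross(n, r)
    n_dot_r = dot(n, r)
    return tuple(c * ri + s * ki + (1 - c) * n_dot_r * ni
                 for ri, ki, ni in zip(r, n_cross_r, n))


n = (0.0, 0.0, 1.0)  # rotation axis (unit vector)
r = (1.0, 0.0, 1.0)
dphi = 1e-4

exact = rotate(r, n, dphi)
linear = tuple(ri + dphi * ki for ri, ki in zip(r, cross(n, r)))  # r + dphi (n x r)

error = max(abs(x - y) for x, y in zip(exact, linear))
print(error < dphi ** 2)  # True: the difference is of second order in dphi
```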

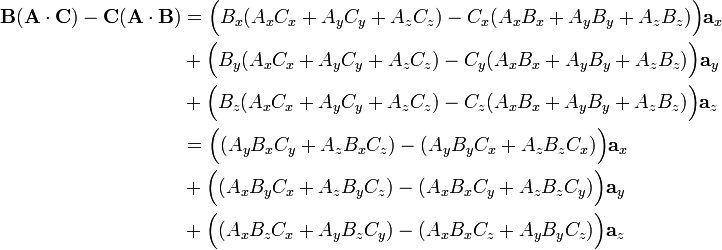

Triple product

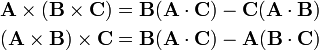

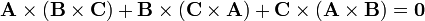

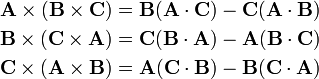

It holds that

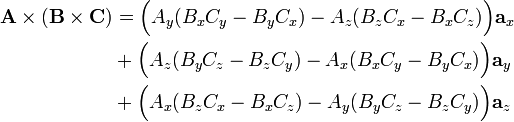

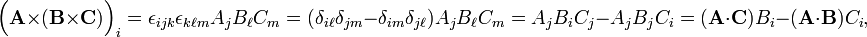

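The proof is straightforward. Upon writing the vectors in terms of a Cartesian basis $\mathbf{a}_x$, $\mathbf{a}_y$, $\mathbf{a}_z$, the first step is

$$\mathbf{B}\times\mathbf{C} = (B_yC_z - B_zC_y)\,\mathbf{a}_x + (B_zC_x - B_xC_z)\,\mathbf{a}_y + (B_xC_y - B_yC_x)\,\mathbf{a}_z.$$

The second step is, for the $\mathbf{a}_x$ component (the other components follow in the same way),

$$\big[\mathbf{A}\times(\mathbf{B}\times\mathbf{C})\big]_x = A_y\,(B_xC_y - B_yC_x) - A_z\,(B_zC_x - B_xC_z).$$

On the other hand, since the terms $A_xB_xC_x$ cancel,

$$\big[\mathbf{B}(\mathbf{A}\cdot\mathbf{C}) - \mathbf{C}(\mathbf{A}\cdot\mathbf{B})\big]_x = B_x\,(A_yC_y + A_zC_z) - C_x\,(A_yB_y + A_zB_z).$$

Comparison of the coefficients of the basis vectors proves the first triple product identity. The proof of the second one runs along the same lines.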
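The componentwise comparison can also be left to a computer. This minimal Python sketch (helper names ours, for illustration) checks the expansion A×(B×C) = B(A⋅C) − C(A⋅B) and the companion expansion (A×B)×C = B(A⋅C) − A(B⋅C), taken here in their standard forms, for one set of integer vectors, so the arithmetic is exact.

```python
# Illustrative helpers (our own names, not from the article).
def cross(a, b):
    ax, ay, az = a
    bx, by, bz = b
    return (ay * bz - az * by, az * bx - ax * bz, ax * by - ay * bx)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def combo(s, u, t, v):
    """The linear combination s*u - t*v for scalars s, t and vectors u, v."""
    return tuple(s * ui - t * vi for ui, vi in zip(u, v))


A, B, C = (1, 2, 3), (4, 5, 6), (7, 8, 10)

# A x (B x C) = B (A.C) - C (A.B)
print(cross(A, cross(B, C)), combo(dot(A, C), B, dot(A, B), C))  # (-12, 9, -2) (-12, 9, -2)
# (A x B) x C = B (A.C) - A (B.C)
print(cross(cross(A, B), C), combo(dot(A, C), B, dot(B, C), A))  # (84, 9, -66) (84, 9, -66)
```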

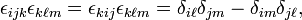

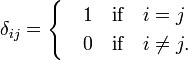

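A more elegant proof uses the antisymmetric Levi-Civita symbol, which satisfies the following property (summation convention implied, that is, repeated indices are summed over):

$$\varepsilon_{ijk}\,\varepsilon_{ilm} = \delta_{jl}\,\delta_{km} - \delta_{jm}\,\delta_{kl},$$

where δij is the Kronecker delta,

$$\delta_{ij} = \begin{cases} 1 & \text{if } i = j,\\ 0 & \text{if } i \neq j. \end{cases}$$

Consider

$$\big[\mathbf{A}\times(\mathbf{B}\times\mathbf{C})\big]_i = \varepsilon_{ijk}\,A_j\,\varepsilon_{klm}\,B_l\,C_m = \varepsilon_{kij}\,\varepsilon_{klm}\,A_j B_l C_m = (\delta_{il}\,\delta_{jm} - \delta_{im}\,\delta_{jl})\,A_j B_l C_m = B_i\,(A_jC_j) - C_i\,(A_jB_j),$$

which again gives the result for the first triple product.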
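The contraction identity for the Levi-Civita symbol used above involves only 3⁴ = 81 combinations of the free indices j, k, l, m, so it can be confirmed by brute force. A minimal Python sketch with 0-based indices (helper names ours, for illustration):

```python
from itertools import product

# Illustrative helpers (our own names, not from the article).
EVEN = {(0, 1, 2), (1, 2, 0), (2, 0, 1)}
ODD = {(0, 2, 1), (2, 1, 0), (1, 0, 2)}


def eps(i, j, k):
    """Levi-Civita symbol with 0-based indices."""
    return 1 if (i, j, k) in EVEN else -1 if (i, j, k) in ODD else 0


def delta(i, j):
    """Kronecker delta."""
    return 1 if i == j else 0


# sum over i of eps_ijk eps_ilm  ==  delta_jl delta_km - delta_jm delta_kl, for all j, k, l, m
identity_holds = all(
    sum(eps(i, j, k) * eps(i, l, m) for i in range(3))
    == delta(j, l) * delta(k, m) - delta(j, m) * delta(k, l)
    for j, k, l, m in product(range(3), repeat=4)
)
print(identity_holds)  # True
```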

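The triple product satisfies the Jacobi identity

$$\mathbf{A}\times(\mathbf{B}\times\mathbf{C}) + \mathbf{B}\times(\mathbf{C}\times\mathbf{A}) + \mathbf{C}\times(\mathbf{A}\times\mathbf{B}) = \mathbf{0}.$$

This is proved by adding the following relations

$$\begin{aligned}
\mathbf{A}\times(\mathbf{B}\times\mathbf{C}) &= \mathbf{B}\,(\mathbf{A}\cdot\mathbf{C}) - \mathbf{C}\,(\mathbf{A}\cdot\mathbf{B}),\\
\mathbf{B}\times(\mathbf{C}\times\mathbf{A}) &= \mathbf{C}\,(\mathbf{B}\cdot\mathbf{A}) - \mathbf{A}\,(\mathbf{B}\cdot\mathbf{C}),\\
\mathbf{C}\times(\mathbf{A}\times\mathbf{B}) &= \mathbf{A}\,(\mathbf{C}\cdot\mathbf{B}) - \mathbf{B}\,(\mathbf{C}\cdot\mathbf{A}),
\end{aligned}$$

and using A⋅B = B⋅A, etc.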
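The Jacobi identity can be checked the same way. A minimal Python sketch with integer vectors (helper names ours, for illustration), so that the three nonzero terms cancel exactly:

```python
# Illustrative helpers (our own names, not from the article).
def cross(a, b):
    ax, ay, az = a
    bx, by, bz = b
    return (ay * bz - az * by, az * bx - ax * bz, ax * by - ay * bx)


def add(*vectors):
    """Componentwise sum of any number of 3-vectors."""
    return tuple(sum(components) for components in zip(*vectors))


A, B, C = (1, 2, 3), (4, 5, 6), (7, 8, 10)

jacobi = add(cross(A, cross(B, C)), cross(B, cross(C, A)), cross(C, cross(A, B)))
print(jacobi)  # (0, 0, 0)
```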

Generalization

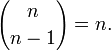

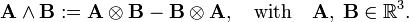

Twofold wedge product

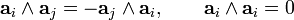

From a somewhat more abstract point of view one may define the vector product as an element of the antisymmetric subspace of the 9-dimensional tensor product space ℝ³⊗ℝ³. This antisymmetric subspace is of dimension 3. An element of the space is sometimes written as a wedge product,

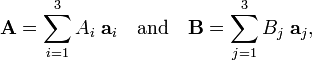

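If a1, a2, a3 is an orthonormal basis of ℝ³ and

$$\mathbf{A} = \sum_{i=1}^{3} A_i\,\mathbf{a}_i, \qquad \mathbf{B} = \sum_{j=1}^{3} B_j\,\mathbf{a}_j,$$

then, upon noting that

$$\mathbf{a}_j\wedge\mathbf{a}_i = -\,\mathbf{a}_i\wedge\mathbf{a}_j \qquad\text{and hence}\qquad \mathbf{a}_i\wedge\mathbf{a}_i = 0,$$

it follows that

$$\begin{aligned}
\mathbf{A}\wedge\mathbf{B} &= \left(\sum_{i=1}^{3} A_i \mathbf{a}_i \right) \wedge \left(\sum_{j=1}^{3} B_j \mathbf{a}_j \right) = \sum_{i,j=1}^{3} A_i B_j \; (\mathbf{a}_i \wedge\mathbf{a}_j) \\
&= \sum_{i<j} A_iB_j\; (\mathbf{a}_i \wedge\mathbf{a}_j) + \sum_{i>j} A_iB_j\; (\mathbf{a}_i \wedge\mathbf{a}_j) \\
&= \sum_{i<j}\left[ A_iB_j\; (\mathbf{a}_i \wedge\mathbf{a}_j) + A_jB_i\; (\mathbf{a}_j \wedge\mathbf{a}_i) \right] \\
&= \sum_{i<j}\left[ A_iB_j - A_jB_i \right]\; (\mathbf{a}_i \wedge\mathbf{a}_j).
\end{aligned}$$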

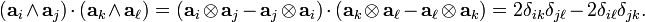

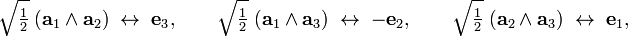

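The basis elements are orthogonal (and easily normalized); for i < j and k < l,

$$\langle \mathbf{a}_i\wedge\mathbf{a}_j \,|\, \mathbf{a}_k\wedge\mathbf{a}_l \rangle = 2\,\delta_{ik}\,\delta_{jl}.$$

Make the identification:

$$\mathbf{e}_1 \equiv \tfrac{1}{\sqrt{2}}\,\mathbf{a}_2\wedge\mathbf{a}_3, \qquad \mathbf{e}_2 \equiv \tfrac{1}{\sqrt{2}}\,\mathbf{a}_3\wedge\mathbf{a}_1, \qquad \mathbf{e}_3 \equiv \tfrac{1}{\sqrt{2}}\,\mathbf{a}_1\wedge\mathbf{a}_2,$$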

and it follows that the new vectors form an orthonormal basis,

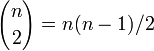

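The wedge product corresponds to

$$\mathbf{A}\wedge\mathbf{B} \;\leftrightarrow\; \sqrt{2}\,\Big[ (A_2B_3 - A_3B_2)\,\mathbf{e}_1 - (A_1B_3 - A_3B_1)\,\mathbf{e}_2 + (A_1B_2 - A_2B_1)\,\mathbf{e}_3 \Big],$$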

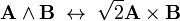

and it is concluded that the cross product can be identified with the wedge product (up to the factor √2).
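The correspondence can be traced numerically. In this minimal Python sketch (names ours, for illustration; 0-based indices), `wedge_coefficients` returns the coefficients A_iB_j − A_jB_i of a_i∧a_j for i < j, and `to_e_basis` applies the identification e1 = (a2∧a3)/√2, e2 = (a3∧a1)/√2, e3 = (a1∧a2)/√2, assumed here as the one consistent with the correspondence above. Dividing out the factor √2 leaves the components of A×B.

```python
import math


# Illustrative helpers (our own names, not from the article).
def wedge_coefficients(A, B):
    """Coefficients A_i B_j - A_j B_i of a_i ^ a_j for i < j (0-based indices)."""
    return {(i, j): A[i] * B[j] - A[j] * B[i] for i in range(3) for j in range(i + 1, 3)}


def to_e_basis(w):
    """Components of A ^ B in the basis e_1, e_2, e_3, where e_k = (a_i ^ a_j) / sqrt(2)."""
    root2 = math.sqrt(2)
    # a_1 ^ a_3 = -(a_3 ^ a_1) = -sqrt(2) e_2, hence the minus sign in the middle entry
    return (root2 * w[(1, 2)], -root2 * w[(0, 2)], root2 * w[(0, 1)])


def cross(a, b):
    ax, ay, az = a
    bx, by, bz = b
    return (ay * bz - az * by, az * bx - ax * bz, ax * by - ay * bx)


A, B = (1, 2, 3), (4, 5, 6)
components = to_e_basis(wedge_coefficients(A, B))

# After dividing out sqrt(2), the e-basis components match A x B = (-3, 6, -3)
print([c / math.sqrt(2) for c in components], cross(A, B))
```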

Threefold wedge product

The association between wedge product and vector product does not hold in the case of 3-vectors (members of three-dimensional spaces) for more than two factors. Above it is shown how to expand the 3-vector A×(B×C) in terms of B and C (3-vectors), while the wedge product is the antisymmetrized projection of A⊗B⊗C:

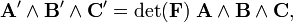

This product is non-vanishing if and only if A, B, and C are linearly independent (hence a fourfold wedge product of 3-vectors vanishes). Furthermore, if A is multiplied by the 3×3 matrix F, A′ = FA, and B′ and C′ are likewise defined by multiplication with F, then

$$\mathbf{A}'\wedge\mathbf{B}'\wedge\mathbf{C}' = \det(\mathbf{F})\;\mathbf{A}\wedge\mathbf{B}\wedge\mathbf{C},$$

where det(F) is the determinant of the matrix F. If the determinant is equal to unity (as for proper rotation matrices), the threefold wedge product of 3-vectors is invariant, i.e., a scalar.
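The threefold wedge product of 3-vectors has a single independent component, proportional to the determinant of the components of A, B, and C, that is, to the triple product A⋅(B×C). The transformation rule can therefore be illustrated with the triple product standing in for A∧B∧C. In this minimal Python sketch, the helper names and the matrix F are our own choices for illustration.

```python
# Illustrative helpers and example matrix (our own choices, not from the article).
def cross(a, b):
    ax, ay, az = a
    bx, by, bz = b
    return (ay * bz - az * by, az * bx - ax * bz, ax * by - ay * bx)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def triple(a, b, c):
    """a . (b x c), the single component of a ^ b ^ c up to normalization."""
    return dot(a, cross(b, c))


def matvec(F, v):
    return tuple(sum(F[i][j] * v[j] for j in range(3)) for i in range(3))


def det3(F):
    """Determinant of a 3x3 matrix, expanded along the first row."""
    return (F[0][0] * (F[1][1] * F[2][2] - F[1][2] * F[2][1])
            - F[0][1] * (F[1][0] * F[2][2] - F[1][2] * F[2][0])
            + F[0][2] * (F[1][0] * F[2][1] - F[1][1] * F[2][0]))


F = [[2, 1, 0], [0, 1, 3], [1, 0, 1]]
A, B, C = (1, 2, 3), (0, 1, 4), (5, 6, 0)

before = triple(A, B, C)
after = triple(matvec(F, A), matvec(F, B), matvec(F, C))
print(det3(F), before, after)  # 5 1 5
```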

General wedge product

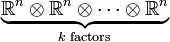

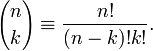

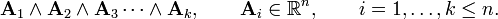

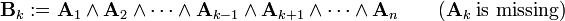

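In general, the antisymmetric subspace of the k-fold tensor power (the k-fold tensor product of the same n-dimensional space, k ≤ n),

$$\underbrace{\mathbb{R}^n\otimes\mathbb{R}^n\otimes\cdots\otimes\mathbb{R}^n}_{k\ \text{factors}},$$

is of dimension

$$\binom{n}{k} = \frac{n!}{k!\,(n-k)!}.$$

Elements of the antisymmetric subspace of the tensor power are wedge (exterior) products, written as

$$\mathbf{A}_1\wedge\mathbf{A}_2\wedge\cdots\wedge\mathbf{A}_k.$$

The antisymmetric products may be projected from the tensor power by means of the antisymmetrizer. The antisymmetric subspace of a twofold tensor product space is of dimension

$$\binom{n}{2} = \frac{n(n-1)}{2}.$$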

The latter number is equal to 3 only if n = 3. For instance, for n = 2 or 4, the antisymmetric subspaces are of dimension 1 and 6, respectively.

It can be shown that a wedge product consisting of n−1 factors transforms as a vector in ℝⁿ. In that sense the antisymmetric product Bk,

$$\mathbf{B}_k \equiv \mathbf{A}_1\wedge\cdots\wedge\mathbf{A}_{k-1}\wedge\mathbf{A}_{k+1}\wedge\cdots\wedge\mathbf{A}_n \qquad (\mathbf{A}_k\ \text{omitted}),$$

is the generalization of a vector product. Clearly Bk belongs to a space of dimension

$$\binom{n}{n-1} = n.$$

In the case of n−1 factors one can make the association between wedge products and vectors in the same way as for the n = 3 vector product. The n-dimensional space spanned by the wedge products Bk (k = 1, 2, ..., n) is the dual of ℝⁿ.[1]
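The dimension counts in this section are binomial coefficients and can be tabulated with Python's `math.comb` (available since Python 3.8). The short sketch below confirms that only n = 3 gives a twofold antisymmetric subspace with the same dimension as the space itself, while n−1 factors always give dimension n.

```python
from math import comb

for n in range(2, 7):
    two_fold = comb(n, 2)       # dimension of the antisymmetric part of R^n (x) R^n
    n_minus_1 = comb(n, n - 1)  # dimension of the antisymmetric part of the (n-1)-fold power
    print(n, two_fold, n_minus_1)

# Values of n for which the twofold wedge product has dimension n, like an ordinary vector
print([n for n in range(2, 20) if comb(n, 2) == n])  # [3]
```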

Note

- [1] In many-fermion physics a vector Ak corresponds to a fermionic particle in orbital k and Bk corresponds to a hole in orbital k; holes and particles transform contragrediently.

External link

The very first textbook on vector analysis (Vector analysis: a text-book for the use of students of mathematics and physics, founded upon the lectures of J. Willard Gibbs by Edwin Bidwell Wilson, Yale University Press, New Haven, 1901) is available online. In this book the synonyms skew product, cross product, and vector product are introduced.

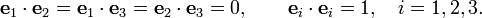
